Qwen 3 AI Model: Reasons You Should Care About It

April 30, 2025

Alex - aiToggler Team

Content crafted and reviewed by a human.

Artificial intelligence is moving fast, and Alibaba’s new Qwen3 model is one of the reasons why.

Unlike many closed AI services, Qwen3 is being released with open weights under the Apache 2.0 license, which means anyone can download and run it, even commercially, without paying licensing fees.

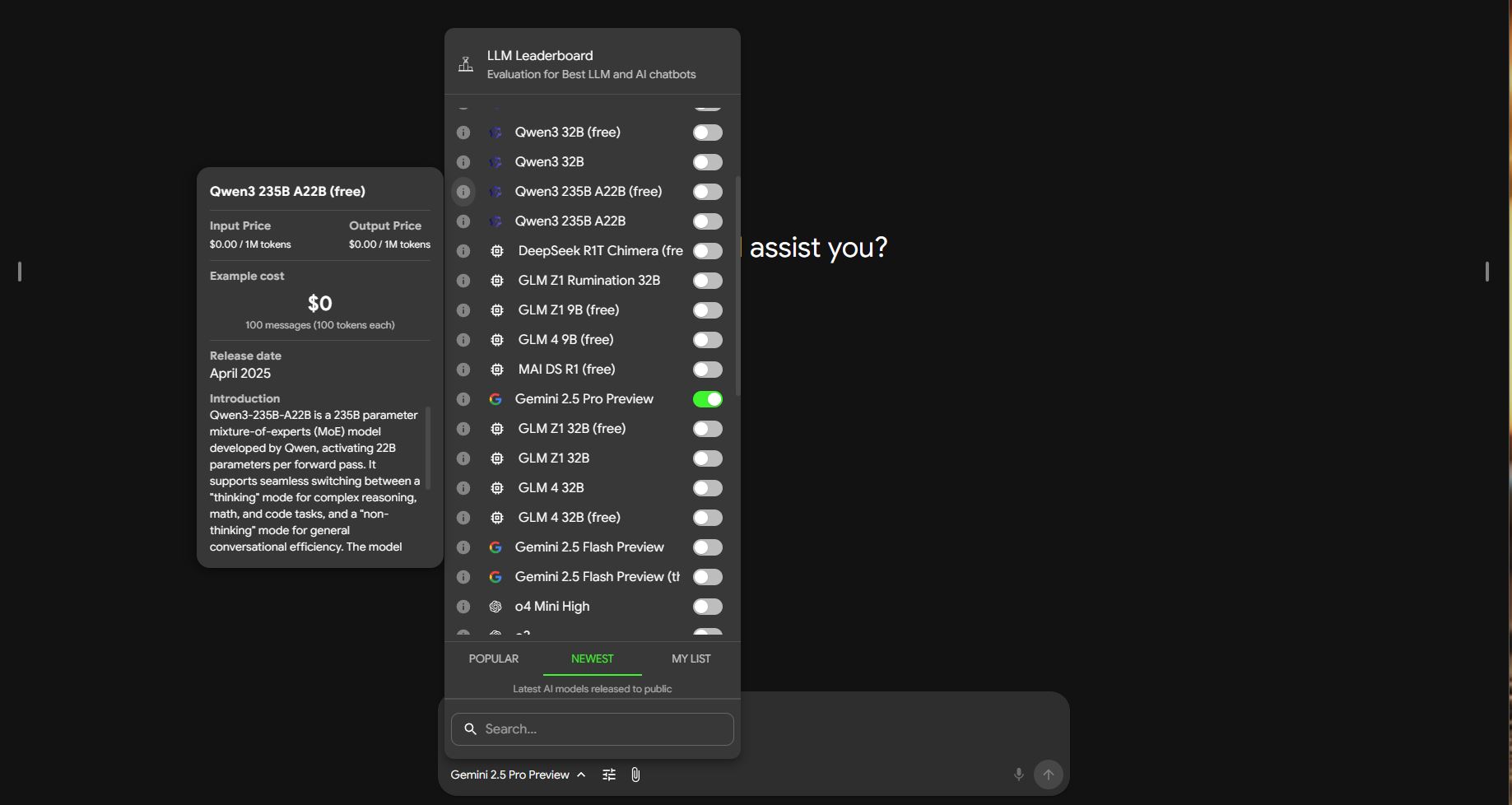

You can try it right now in our aiToggler app.

The fact that it’s open source is a big deal in today’s AI landscape, where open-source progress and global competition matter. A powerful AI model coming from China puts pressure on U.S. companies (like OpenAI and Google) to keep up, and it gives developers more freedom.

In fact, analysts note that “state-of-the-art and open” models like Qwen3 will be widely used by businesses, helping companies build custom tools rather than rely only on closed systems.

At the same time, Alibaba’s Qwen3 brings AI power to a wide audience. By making these models free to use, it gives developers, students, and businesses around the world more options.

For example, a startup can run Qwen3 on its own hardware, and students can study a cutting-edge model without paying subscription fees. The open release of Qwen3 marks a step toward democratizing AI: anyone can inspect the model weights, fine-tune the model for a specific task, or even build new tools on top of it.

Stand-Out Technical Features of Qwen3

Alibaba’s Qwen3 series includes eight models of different sizes, from tiny (0.6 billion parameters) to huge (235 billion parameters).

Two of these models use a design called Mixture-of-Experts (MoE). In an MoE model, only a small set of the model’s “experts” is active for each token. This lets the model be very large (235B total parameters) while running only a fraction of those parameters at a time (22B active).

This makes Qwen3 both powerful and efficient: it can match bigger models while doing only the per-token computation of a much smaller one.
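
The toy top-k MoE layer below, written in plain Python, makes the routing idea concrete. It is a minimal sketch and not Qwen3’s actual implementation. The 2-dimensional “token”, the router weights, the four stand-in experts, and k=2 are all illustrative assumptions. Real MoE layers sit inside every transformer block and use far more, far larger experts. The idea to take away is that the router scores every expert, but only the top-k experts actually run for a given token.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]


def softmax(xs: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_layer(
    token: Vector,
    router: Sequence[Vector],
    experts: Sequence[Callable[[Vector], Vector]],
    k: int = 2,
) -> Tuple[Vector, List[int]]:
    """Route one token to its top-k experts and mix their outputs (toy sketch)."""
    # 1. The router scores every expert (dot product with that expert's router row).
    scores = [sum(w * x for w, x in zip(row, token)) for row in router]
    # 2. Keep only the k highest-scoring experts; the others are never evaluated.
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:k]
    # 3. Turn the chosen experts' scores into mixing weights that sum to 1.
    gates = softmax([scores[i] for i in chosen])
    # 4. Weighted sum of the chosen experts' outputs only.
    out = [0.0] * len(token)
    for gate, i in zip(gates, chosen):
        expert_out = experts[i](token)
        out = [o + gate * y for o, y in zip(out, expert_out)]
    return out, chosen


# Four hypothetical "experts" that just scale their input by 1, 2, 3, or 4.
experts = [lambda v, s=s: [s * x for x in v] for s in (1.0, 2.0, 3.0, 4.0)]
router = [[1.0, 0.0], [0.0, 1.0], [2.0, 0.0], [-1.0, 0.0]]
output, used = moe_layer([1.0, 0.0], router, experts, k=2)
print(used)  # [2, 0]: only 2 of the 4 experts ran for this token
```

Scaled up, this is the same reason a 235B-parameter model can run with only 22B parameters active per token.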

All Qwen3 models share a transformer-based architecture (the same general type as GPT), with modern tweaks for speed and scale. For example, they use grouped-query attention, which reduces the memory needed during inference.

A key innovation is the hybrid “thinking” mode.

Qwen3 can run in two modes:

- a slow, careful “thinking” mode for hard problems

- a fast “non-thinking” mode for simple queries

Users can choose whether the model should “think deeply” or answer quickly. This flexibility means Qwen3 can do things like check its own reasoning step-by-step when needed, or zip out a quick answer when you want speed.
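
Qwen3’s model cards describe two ways to pick the mode. One is an `enable_thinking` flag passed to the Hugging Face tokenizer’s `apply_chat_template`. The other is a pair of “soft switches” (`/think` and `/no_think`) added to a user message. In thinking mode, the reasoning is wrapped in `<think>...</think>` tags ahead of the final answer. The sketch below covers only the soft-switch and output-parsing side. The helper names are hypothetical, and you should check the tag format against the model card for the checkpoint you actually deploy.

```python
import re
from typing import Tuple

# Reasoning appears between <think> and </think>; the final answer follows it.
THINK_BLOCK = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def with_mode_switch(user_message: str, think: bool) -> str:
    """Append a Qwen3 soft switch to a user turn (hypothetical helper).

    Per the Qwen3 docs, the switch is honored only when thinking is enabled
    in the chat template (enable_thinking=True, the default).
    """
    switch = "/think" if think else "/no_think"
    return f"{user_message} {switch}"


def split_thinking(raw_output: str) -> Tuple[str, str]:
    """Split raw model text into (reasoning, answer)."""
    match = THINK_BLOCK.search(raw_output)
    if match is None:
        # No think block at all: treat the whole output as the answer.
        return "", raw_output.strip()
    reasoning = match.group(1).strip()
    answer = raw_output[match.end():].strip()
    return reasoning, answer


print(split_thinking("<think>\n17 * 23 = 391\n</think>\n\nThe answer is 391."))
# ('17 * 23 = 391', 'The answer is 391.')
```

As we read the model card, a `/no_think` turn still produces a think block, but it is empty. With `enable_thinking=False` there is no block at all. The parser returns an empty reasoning string in both cases.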

Another standout feature is the large context window.

The largest Qwen3 models can handle 128K tokens at once, which is roughly 192 pages of text in a single prompt. (Smaller Qwen3 models handle 32K tokens.)

This is more text than many AI models can process at once. A context window this big lets Qwen3 work with long documents, long conversations, or even entire books, so it is very useful for tasks that need a lot of background context.
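
The “192 pages” figure comes from simple arithmetic. The sketch below reproduces it using two rules of thumb, which are our assumptions and not Alibaba’s figures: about 0.75 English words per token and about 500 words per page. Real ratios vary with language, tokenizer, and formatting. The `fits_in_context` helper is hypothetical and illustrates that the prompt and the model’s reply share the same window.

```python
# Rule-of-thumb assumptions (not official Qwen3 figures):
WORDS_PER_TOKEN = 0.75  # rough average for English text
WORDS_PER_PAGE = 500    # roughly one single-spaced page


def tokens_to_pages(tokens: int) -> float:
    """Estimate how many pages of English text fit in a token budget."""
    return tokens * WORDS_PER_TOKEN / WORDS_PER_PAGE


def fits_in_context(prompt_tokens: int, max_output_tokens: int, context_tokens: int) -> bool:
    """Check that the prompt plus the reply budget fits in the context window."""
    return prompt_tokens + max_output_tokens <= context_tokens


for window in (128_000, 32_000):
    print(f"{window:,} tokens ~ {tokens_to_pages(window):.0f} pages")
# 128,000 tokens ~ 192 pages
# 32,000 tokens ~ 48 pages
```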

Qwen3 also supports 119 languages and dialects, giving it strong multilingual and translation abilities. From English and Chinese to Arabic, Swahili, and dozens more, Qwen3 is trained to understand and generate text in many tongues.

This makes it a versatile choice for international applications.

In summary, the Qwen3 family combines:

- A wide range of model sizes (0.6B to 235B) to fit different needs.

- Mixture-of-Experts design for efficiency and power.

- Hybrid reasoning modes (quick chat or deep thought).

- Large context windows (up to 128K tokens) for long inputs.

- Multilingual training covering 100+ languages.

These technical features mean Qwen3 is both cutting-edge and practical for developers.

Real-World Use Cases

What can you do with Qwen3? Its creators and early users highlight several promising applications:

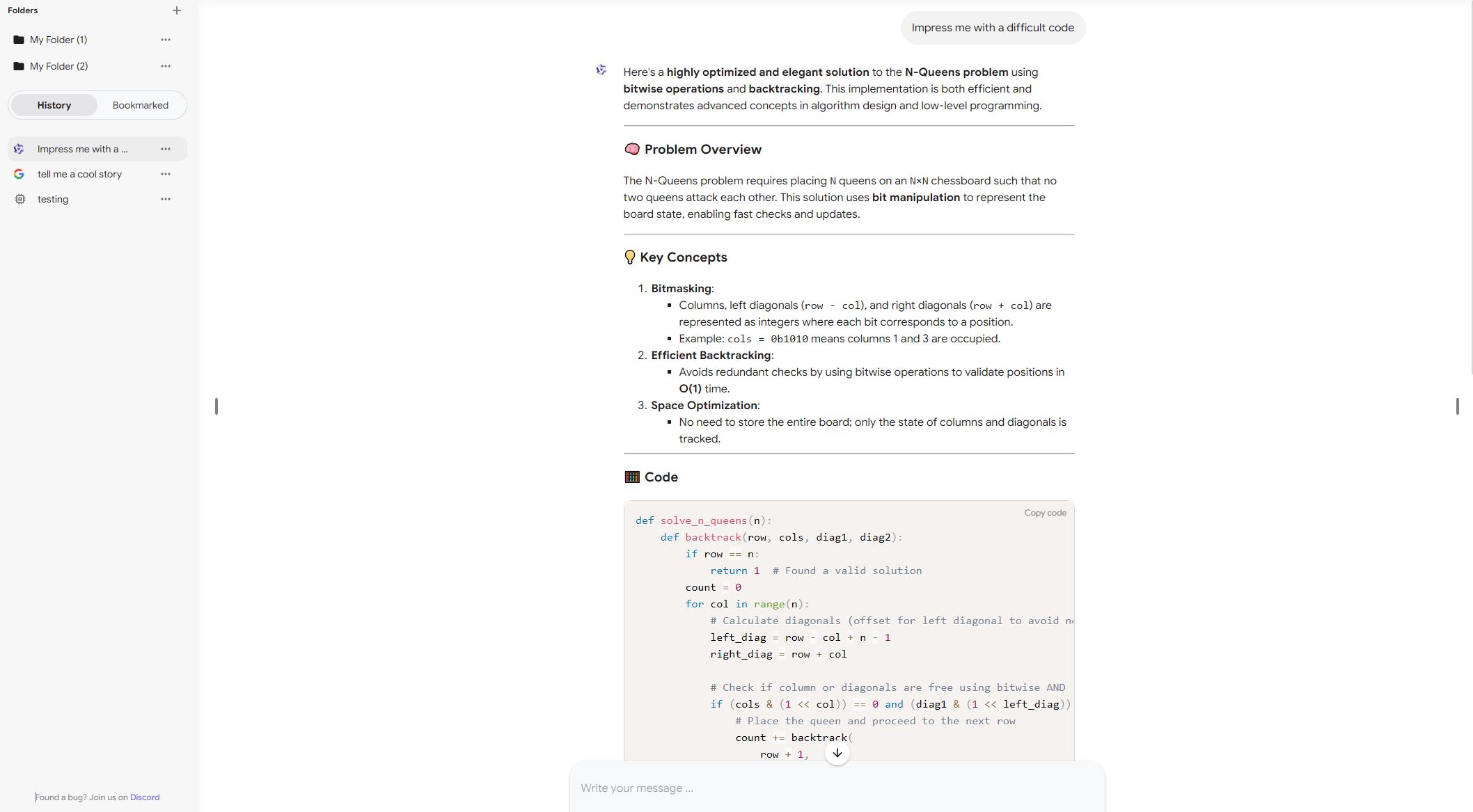

Coding Assistants: Qwen3 is optimized for writing code. Alibaba even released a Qwen3-based coding integration, the Tongyi Lingma (通义灵码) coding agent for popular IDEs. In practice, Qwen3 can help write programs, explain code, or debug. In coding contests it already matches or beats well-known AI tools: the top Qwen3 model narrowly outperformed OpenAI’s o3-mini and Google’s Gemini 2.5 Pro on Codeforces coding challenges. This means Qwen3 can serve as a powerful programming helper.

Chatbots and Agents: Because of its “thinking” mode and tool-calling ability, Qwen3 is good at agent-like tasks. It can call external tools, APIs, or databases as part of its reasoning. For example, Alibaba reports that Qwen3 “excels” at using tools and following complex instructions. This makes it useful for building intelligent assistants, search bots, or customer service agents that need to do multi-step reasoning or fetch data from other systems.
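
Tool calling works like this: the model emits a structured request, and your own code executes it. Qwen’s published chat templates wrap each call in `<tool_call>...</tool_call>` tags around a JSON object with `name` and `arguments` fields. Treat that format as an assumption to verify for your setup, because serving frameworks such as Qwen-Agent or vLLM may parse it for you. The `get_order_status` tool and its fake data are hypothetical.

```python
import json
import re
from typing import Any, Callable, Dict, List

# Assumed format: <tool_call>{"name": ..., "arguments": {...}}</tool_call>
TOOL_CALL = re.compile(r"<tool_call>\s*(\{.*?\})\s*</tool_call>", re.DOTALL)


def get_order_status(order_id: str) -> str:
    """Hypothetical tool standing in for a real database or API lookup."""
    fake_db = {"A100": "shipped", "B200": "processing"}
    return fake_db.get(order_id, "unknown")


TOOLS: Dict[str, Callable[..., Any]] = {"get_order_status": get_order_status}


def run_tool_calls(model_output: str) -> List[Dict[str, Any]]:
    """Execute every tool call found in the model's output and collect results."""
    results = []
    for match in TOOL_CALL.finditer(model_output):
        call = json.loads(match.group(1))
        fn = TOOLS.get(call["name"])
        if fn is None:
            result = f"error: unknown tool {call['name']}"
        else:
            result = fn(**call.get("arguments", {}))
        results.append({"name": call["name"], "content": result})
    return results


reply = '<tool_call>\n{"name": "get_order_status", "arguments": {"order_id": "A100"}}\n</tool_call>'
print(run_tool_calls(reply))
# [{'name': 'get_order_status', 'content': 'shipped'}]
```

In a full agent loop, each result would be sent back to the model as a tool message so it can keep reasoning with the data it fetched.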

Multilingual Translation and Chat: With support for 119 languages, Qwen3 can be used in translation apps or global chatbots. Businesses that need AI in multiple regions (for product descriptions, customer interaction, etc.) can leverage Qwen3’s language range.

General-Purpose Chat: Of course, Qwen3 can be the core of chatbots or virtual assistants. Alibaba has a public Qwen Chat interface, but companies can also host their own chatbot powered by Qwen3. Because it can switch to a fast mode, it can handle everyday queries quickly, yet dive into detailed reasoning if needed.

Educational and Research Tools: Its reasoning abilities in math and logic, combined with its open access, make Qwen3 a candidate for educational apps, tutoring systems, or research projects where transparency is important.

In short, Qwen3 is designed to power a wide variety of AI tools: from coding plugins and question-answering bots to multilingual translators and smart assistants.

Its combination of deep reasoning and practical flexibility makes it a general-purpose engine for developers.

Performance and Benchmarks

Now let’s get to the meat of it.

Alibaba’s team reports that Qwen3 achieves top-tier results on many standard benchmarks.

In simple terms, Qwen3 is competitive with the best closed-source models from OpenAI, Google, and others.

For example:

- On coding benchmarks, the flagship Qwen3-235B model “just beats” OpenAI’s o3-mini and Google’s Gemini 2.5 Pro in Codeforces programming contests. It also outperforms o3-mini on a tough math contest (the AIME test). Even the largest dense Qwen3 model (32B) surpasses OpenAI’s older o1 model on coding exams like LiveCodeBench.

- On general knowledge and reasoning tasks (like MMLU or similar tests), Qwen3 is also impressive. The official Qwen blog says the 235B model “achieves competitive results” when compared to other top models such as DeepSeek-R1, OpenAI’s o1 and o3-mini, and Gemini 2.5 Pro. Independent analyses report that Qwen3 outperforms similarly sized open models (like Llama 4 Maverick) in tasks like general-knowledge questions and reasoning problems.

- Efficiency: Thanks to improved training and, in two of the models, the MoE design, even smaller Qwen3 models deliver big-model performance. For instance, Qwen3-32B (dense) performs as well as much larger previous models (like Qwen2.5-72B) on many tasks. The 30B MoE model (with only 3B active parameters) can beat older 32B models on reasoning tasks while using far less compute per token. This means businesses can pick a smaller Qwen3 model and still get top results at lower cost.
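
A quick back-of-the-envelope calculation shows why active parameters matter for cost. The sketch uses a common rule of thumb: a forward pass costs about 2 FLOPs per active parameter per token. It also uses the rounded sizes from the model names, so the real counts differ slightly. One caveat is made explicit in the code. MoE saves compute, not memory, because every expert’s weights still have to be loaded. The memory estimate assumes bf16 weights (2 bytes per parameter) and ignores the KV cache and other runtime overhead.

```python
# (total parameters, active parameters per token), rounded from the model names
MODELS = {
    "Qwen3-235B-A22B": (235e9, 22e9),  # MoE
    "Qwen3-30B-A3B": (30e9, 3e9),      # MoE
    "Qwen3-32B": (32e9, 32e9),         # dense: every parameter is active
}


def flops_per_token(model: str) -> float:
    """Rule of thumb: ~2 FLOPs (one multiply + one add) per active parameter."""
    _total, active = MODELS[model]
    return 2 * active


def weight_memory_gb(model: str, bytes_per_param: float = 2.0) -> float:
    """Memory for the weights alone; an MoE model must hold every expert."""
    total, _active = MODELS[model]
    return total * bytes_per_param / 1e9


ratio = flops_per_token("Qwen3-32B") / flops_per_token("Qwen3-30B-A3B")
print(f"32B dense does ~{ratio:.1f}x the per-token compute of 30B-A3B")
print(f"30B-A3B still needs ~{weight_memory_gb('Qwen3-30B-A3B'):.0f} GB for bf16 weights")
# 32B dense does ~10.7x the per-token compute of 30B-A3B
# 30B-A3B still needs ~60 GB for bf16 weights
```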

Overall, benchmarks and comparisons place Qwen3 at or near the top of the leaderboard for open models (see the leaderboard on different categories here). On many metrics it is competitive with leading closed models, and it narrowly edges out Gemini 2.5 Pro on some coding benchmarks such as Codeforces.

Results like these, especially where independent testing confirms them, show that Qwen3 is not just big, but smart.

Summary

In summary, Alibaba’s Qwen3 is one to keep an eye on because it pushes the AI field forward in multiple ways.

It offers an open alternative to closed AI giants, supports developers worldwide, and delivers competitive performance on many tasks.

Whether you’re a coder looking for an AI assistant, a company building a smart agent, or a student learning about AI, Qwen3 has something to offer.

In today’s AI race, Qwen3 shows that powerful models can be accessible to everyone, which is a trend well worth paying attention to.

To try it for free right now, open the aiToggler app, where you can even compare it side by side with 300+ other AI models using the parallel chat feature!